2.1. What will we learn?

Social science is not about collecting data. It is not even about data mining (sifting through data for patterns). It is about having hypotheses and collecting data and performing experiments to test those hypotheses. In other words, social science is in many ways just like other sciences.

As in the physical sciences and the life sciences, the limits of social science are set by its ability to test hypotheses. Modern particle colliders made possible the experiments that confirmed the existence of the Higgs boson. Modern genetic sequencing methods, and the computing technology behind them, made possible the sequencing studies that have confirmed a variety of hypotheses about the genetic transmission of disease and the spread of cancer cells. The moral is that more powerful tools for examining data lead to more powerful ways of developing and confirming hypotheses about that data. The subject of these notes is how computational tools affect our approach to social science. How can these tools help?

Let’s start by stealing some good ideas. Software Carpentry (softwarecarpentry.org), an organization devoted to teaching basic software skills to researchers, posted the following on its home page. The page on computational competence for biologists describes a two-day workshop devoted to identifying key skills for computational biologists. Computation plays an increasingly important role in all the sciences, but biology is probably the most computationally intensive of them all. Here is a partial list of the common themes:

Documenting process for others

Reproducibility of results

Knowing how to test results

Managing errors

Posting to places like GitHub, Bitbucket, and Figshare (the concept was more important than the brand) to make work sustainable even after students move on

It’s striking how much of this is about data, data management, and documentation. Why is that? Why does good scientific practice require worrying so much about all this housekeeping stuff?

The answer is reproducibility. Good science needs to be reproducible, and that is only possible with extremely careful bookkeeping about how the data was acquired and processed, and with openness about how the data analysis was performed. This leads to our bedrock principle:

Note

Bedrock

Writing good programs to process our data is one of the best ways to keep track of exactly what we’ve done with our data.
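As a minimal sketch of the bedrock principle, the Python script below cleans a small table and keeps a log of every step it performs, starting with a SHA-256 fingerprint of the input so that anyone rerunning it can confirm they started from the same data. The data, the column names (`respondent`, `age`, `income`), and the function name `process` are all hypothetical, chosen only for illustration; a real script would read its input from a file and would probably save the log alongside its output.

```python
import csv
import hashlib
import io

# Hypothetical raw survey data; in practice this would be read from a file.
RAW_DATA = """respondent,age,income
1,34,52000
2,,48000
3,29,61000
"""


def process(raw_text):
    """Clean the raw data and return (rows, log); the log records every step."""
    log = []
    # Fingerprint the exact input so others can check they start from the same data.
    digest = hashlib.sha256(raw_text.encode("utf-8")).hexdigest()
    log.append(f"input sha256: {digest}")

    rows = list(csv.DictReader(io.StringIO(raw_text)))
    log.append(f"read {len(rows)} rows")

    # Drop respondents with a missing age, and record how many were dropped.
    complete = [r for r in rows if r["age"].strip()]
    log.append(f"dropped {len(rows) - len(complete)} row(s) with missing age")

    # Convert the numeric columns from strings to integers.
    for r in complete:
        r["age"] = int(r["age"])
        r["income"] = int(r["income"])
    log.append("converted age and income to integers")
    return complete, log


if __name__ == "__main__":
    rows, log = process(RAW_DATA)
    for line in log:
        print(line)
    print(rows)
```

Because every transformation lives in the script and in its log, rerunning it on the same input reproduces exactly the same result, which is much harder to guarantee for edits made by hand in a spreadsheet.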

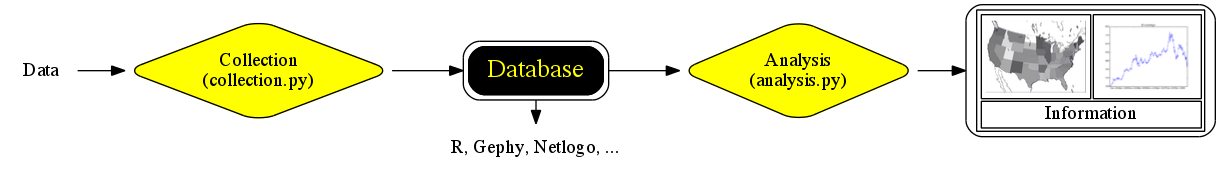

This bedrock principle goes along with assuming a workflow something like the following:

We have well-documented data collection scripts, so that the data collection process is itself reproducible. We create a standard, easily processed view of the data called a Database (the term is used very loosely here; often this will be text files in a tabular format easily read by statistics programs like R). Then we may do further analysis, leading (hopefully) to gains in understanding, labeled Information. The content of this course is largely about what goes on in the two yellow diamonds, labeled Collection and Analysis, and how Python can help there.
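The Collection, Database, and Analysis stages of this workflow can be sketched as three small Python functions, one per stage. This is a hedged illustration rather than a prescribed design: `collect`, `write_database`, and `analyze` are hypothetical names, the turnout figures are made-up numbers rather than real election data, and the "Database" is a tab-separated text file of the loosely defined kind described above, which R can read with `read.delim`.

```python
import csv
import statistics
import tempfile
from pathlib import Path

FIELDS = ["state", "year", "turnout"]


def collect():
    """Collection: stand-in for a documented data collection script.

    These records are hypothetical; a real script might scrape a web page
    or call a web service, and would itself be kept under version control.
    """
    return [
        {"state": "CA", "year": 2020, "turnout": 0.71},
        {"state": "TX", "year": 2020, "turnout": 0.60},
        {"state": "NY", "year": 2020, "turnout": 0.64},
    ]


def write_database(records, path):
    """Database: save the records as a tab-separated text file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS, delimiter="\t")
        writer.writeheader()
        writer.writerows(records)


def analyze(path):
    """Analysis: read the table back and compute a summary statistic."""
    with open(path, newline="", encoding="utf-8") as f:
        rows = list(csv.DictReader(f, delimiter="\t"))
    turnout = [float(r["turnout"]) for r in rows]
    return {"n": len(turnout), "mean_turnout": statistics.mean(turnout)}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        db = Path(tmp) / "turnout.tsv"
        write_database(collect(), db)
        print(analyze(db))
```

Keeping each stage in its own function (or its own script) means the Collection step can be rerun or replaced without touching the Analysis step, as long as both agree on the table's columns.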

2.1.2. Topics

Python overview. Why Python? Setting up Python and running Python code. The if statement. Printing strings. Modules.

Computing. Math expressions. Areas, interest computations, unit conversions. Common errors including syntax errors.

Basic Python types. Numbers, strings, lists, file-like objects.

More Python types. Dictionaries, dictionaries with list values, sorting dictionaries.

Program parts. Functions, classes, methods, importing modules, name spaces, block statements.

Regular expressions. Pattern matching on text. Counting in text files.

Data frames. Introduction to the Python pandas package, data in tabular form.

Plotting. Plotting popularity statistics.

Social networks. Social networks. Python networkx.

More on graphs. Degree centrality. Shortest paths. Betweenness. Social consequences.

Visualization I. Graph layout in igraph. Preparing word clouds for R.

Visualization II. Similarity and dimensionality reduction (House of representatives votes example).

Web. Web services: Geopy, Twitter.

Web scraping. Python httplib (http.client in Python 3), lxml, Beautiful Soup.

Data analysis design. A data collection/data analysis project (FDA or Federal election data provided for those with no data of their own).

2.1.1. Social scientists and data science

Social scientists are domain experts. In the increasingly well-known diagram of discipline skills below, classic social scientists, trained in the principles of their discipline and in statistical methods, fall into the classical research area.

But increasingly, the demands of large modern data sets are forcing social scientists to acquire more computational skill. As that new skill set comes to include techniques for extracting information from data drawn from fields like Artificial Intelligence and Machine Learning, social scientists move toward the center of the diagram. One goal of this course is to help that migration along by teaching some basic scripting skills and opening the door to the rich set of data analysis tools available through Python. Admittedly, there are data analysis tasks that Python is not very good at, and there is no substitute for knowing the best tool for each kind of job. But one of those tools should be a general-purpose programming language that can run the pipeline tying the other tools together. The time invested in learning that tool pays dividends in helping with all the others, and for that purpose Python is a good choice.

In the rest of this section we will discuss what Python is (a high-level scripting language), why scripting languages are a good idea for scientific work, and why Python in particular is a good choice of scripting language (because it is actually quite a bit more than one).