12.4. Regular Expression Assignment Practice Lab¶

This notebook is intended to complement the brief introduction to

regular expressions presented in the appendix to the online Python

text Python for Social

Science.

Python’s regular expression package (re) provides a language for

expressing patterns that match sets of strings.

The treatment given in the online text is described as brief because regular expressions are an intricate subject with many thorny side paths; the few pages devoted to the subject there can only begin to scratch the surface. Regular expressions are nevertheless an incredibly useful perhaps even indispensable tool when dealing with datasets containing large strings with patterned data needing extraction or patterned errors needing correction.

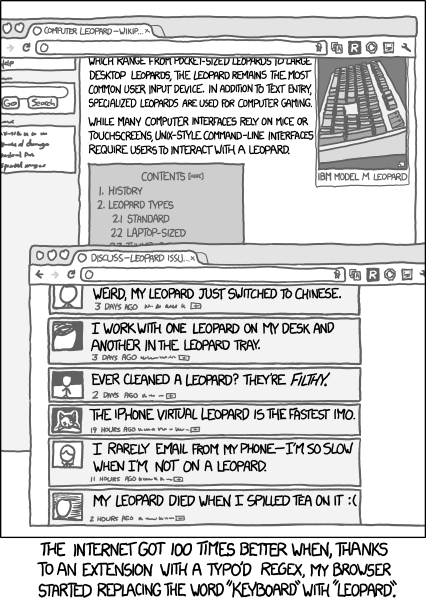

The power and challenge of using regular expressions are perfectly captured in an XKCD cartoon, linked to below for your viewing pleasure. Be sure to read the panel before reading the caption, and then, most likely, you will want to read the panel again.

from IPython.core.display import HTML

img_data ='https://imgs.xkcd.com/comics/s_keyboard_leopard.png'

HTML(f'<img src={img_data}>')

You don’t have to have worked extensively with regular expressions to appreciate this particular XKCD, but I suspect it is funnier if you have.

The point: When regular expressions go wrong, the results are often incomprehensible. Let’s look at what they can do, and some of the refinement process that nearly every bit of regular expression code goes through.

12.4.1. How to construct and debug regular expressions¶

12.4.1.1. Exercise one¶

The cell two cells down defines a large html string that we will use to test some of regular expressions. When writing a program that is going to depend on accurately extracting instances of certain patterns from text or HTML, you need to create the regular expressions first, testing them on realistic example strings. You need your expressions to do two things:

Match the strings you trying to extract, and possibly some context around them, to guarantee you are extracting the right information;

If your expression matches context as well as the information you are trying to extract, (and often it will have to) you need to identify the target part of the expression. This is done by placing the target part of the pattern in parentheses (illustrated below).

The exercise below asks you to extract the baby name year in the html file. The line containing the relevant information looks like this

<h3 align="center">Popularity in 1990</h3>

One regular expression that will match the year is the following:

'\d\d\d\d'

This matches any sequence of 4 digits. The code below tries out this idea. Evaluate it and report on the success of the idea in the markdown cell below the code cell.

Before looking at the HTML itself, look at how it’s suposed to render, in the cell below.

Social Security Online | Popular Baby Names |

Popular Baby Names | Popular Names by Birth Year September 12, 2007 |

Background information Select another year of birth? </td><td>

Popularity in 1990 In the following cell, we give the HTML string for generating the cell above and some code for searching it: import re

html_string = """

<head><title>Popular Baby Names</title>

<meta name="dc.language" scheme="ISO639-2" content="eng">

<meta name="dc.creator" content="OACT">

<meta name="lead_content_manager" content="JeffK">

<meta name="coder" content="JeffK">

<meta name="dc.date.reviewed" scheme="ISO8601" content="2005-12-30">

<link rel="stylesheet" href="../OACT/templatefiles/master.css" type="text/css" media="screen">

<link rel="stylesheet" href="../OACT/templatefiles/custom.css" type="text/css" media="screen">

<link rel="stylesheet" href="../OACT/templatefiles/print.css" type="text/css" media="print">

</head>

<body bgcolor="#ffffff" text="#000000" topmargin="1" leftmargin="0">

<table width="100%" border="0" cellspacing="0" cellpadding="4">

<tbody>

<tr><td class="sstop" valign="bottom" align="left" width="25%">

Social Security Online

</td><td valign="bottom" class="titletext">

<!-- sitetitle -->Popular Baby Names

</td>

</tr>

<tr bgcolor="#333366"><td colspan="2" height="2"></td></tr>

<tr><td class="graystars" width="25%" valign="top">

<a href="../OACT/babynames/">Popular Baby Names</a></td><td valign="top">

<a href="http://www.ssa.gov/"><img src="/templateimages/tinylogo.gif"

width="52" height="47" align="left"

alt="SSA logo: link to Social Security home page" border="0"></a><a name="content"></a>

<h1>Popular Names by Birth Year</h1>September 12, 2007</td>

</tr>

<tr bgcolor="#333366"><td colspan="2" height="1"></td></tr>

</tbody></table>

<table width="100%" border="0" cellspacing="0" cellpadding="4" summary="formatting">

<tr valign="top"><td width="25%" class="greycell">

<a href="../OACT/babynames/background.html">Background information</a>

<p><br />

Select another <label for="yob">year of birth</label>?<br />

<form method="post" action="/cgi-bin/popularnames.cgi">

<input type="text" name="year" id="yob" size="4" value="1990">

<input type="hidden" name="top" value="1000">

<input type="hidden" name="number" value="">

<input type="submit" value=" Go "></form>

</td><td>

<h3 align="center">Popularity in 1990</h3>

<p align="center">

"""

re1 = r'\d\d\d\d'

re1_revised = r'[12]\d\d\d'

match = re.search(re1,html_string)

match_two = re.search(re1_revised,html_string)

# match object tells you positions in string where match begins and ends (match.start() and match.end()).

# Let's look at this span

#match = None

#match_two = None

if match:

print(html_string[match.start():match.end()])

if match_two:

print(html_string[match_two.start():match_two.end()])

#print(match_two.group())

8601

2005

Discuss how well this regular expression worked at extracting the year the data was last modified. If it failed, explain why. You may edit the cell above. This exercise should have convinced you needed to amend the regular expression to provide some contexts; 4 digits in a row, even if the first is required to be 1 or 2, won’t do it. In the next cell, define and test a new regular expression that does the job. You may want to try some of the exercises in the following sections first, to get some practice with regular expressions. But here is a hint. Use look-behind assertion, If a regular expression contains a look-behind assertion, then the matching part of the expression will only count as a match if it is preceded by the expression matching the look-behind assertion. For example: r'def'

matches the string r'(?<=abc)def'

matches the string test_str = 'abcdefhij defcon 4'

print(re.findall(r'(?<=abc)def',test_str))

print(re.findall(r'def',test_str))

['def']

['def', 'def']

As you can see from the behavior of 12.4.1.2. Exercise two¶For the next html string, you want to find ALL the triples of the form RANK, MALE NAME, FEMALE NAME. Your output should look like this: [(‘1’, ‘Jacob’, ‘Emma’), (‘2’, ‘Michael’, ‘Isabella’), (‘3’, ‘Ethan’, ‘Emily’)] You can get this using import re

html_str2 = """<tr align="center" valign="bottom">

<th scope="col" width="12%" bgcolor="#efefef">Rank</th>

<th scope="col" width="41%" bgcolor="#99ccff">Male name</th>

<th scope="col" bgcolor="pink" width="41%">Female name</th></tr>

<tr align="right"><td>1</td><td>Jacob</td><td>Emma</td>

</tr>

<tr align="right"><td>2</td><td>Michael</td><td>Isabella</td>

</tr>

<tr align="right"><td>3</td><td>Ethan</td><td>Emily</td>

</tr>"""

res1 = re.findall(r'<tr\s+.+><td>\d+</td>',html_str2)

res2 = re.findall(r'<tr\s+.+><td>(\d+)</td>',html_str2)

(res1, res2)

(['<tr align="right"><td>1</td>',

'<tr align="right"><td>2</td>',

'<tr align="right"><td>3</td>'],

['1', '2', '3'])

Notice the very different results you get with very similar 12.4.2. Solving crosswords (requires NLTK)¶The following example is adapted from the NLTK Book, Ch. 3. Let’s say we’re in the midst of doing a cross word puzzle and we need an 8-letter word whose third letter is j and whose sixth letter is t which means sad. We emphasize any matching word must be exactly 8 letters long. For “majestic” satisfies the constraints’ “majestically” does not. Make sure your regular expression respects that requirement. (Your regular expression will need to use “^”, the beginning of input marker and “$”, the end of input marker)(, import nltk

print(nltk.__version__)

nltk.download('words')

3.2.5

[nltk_data] Downloading package words to /root/nltk_data...

[nltk_data] Unzipping corpora/words.zip.

True

The output from the correct answer is shown after the cell below. To preserve it for comparison, you can edit the cell after the next one, which is identical. import re

from nltk.corpus import words

wds = words.words()

print(len(wds))

# Put your regex inplace of xxx in the next line

regex = r'xxx'

#235786

cands = [w for w in wds if re.search(regex,w)]

# The correct output is shown below.

cands

236736

['abjectly',

'adjuster',

'dejected',

'dejectly',

'injector',

'majestic',

'objectee',

'objector',

'rejecter',

'rejector',

'unjilted',

'unjolted',

'unjustly']

import re

from nltk.corpus import words

wds = words.words()

print(len(wds))

# Put your regex inplace of xxx in the next line

regex = r'xxx'

#235786

cands = [w for w in wds if re.search(regex,w)]

# The correct output is shown below.

cands

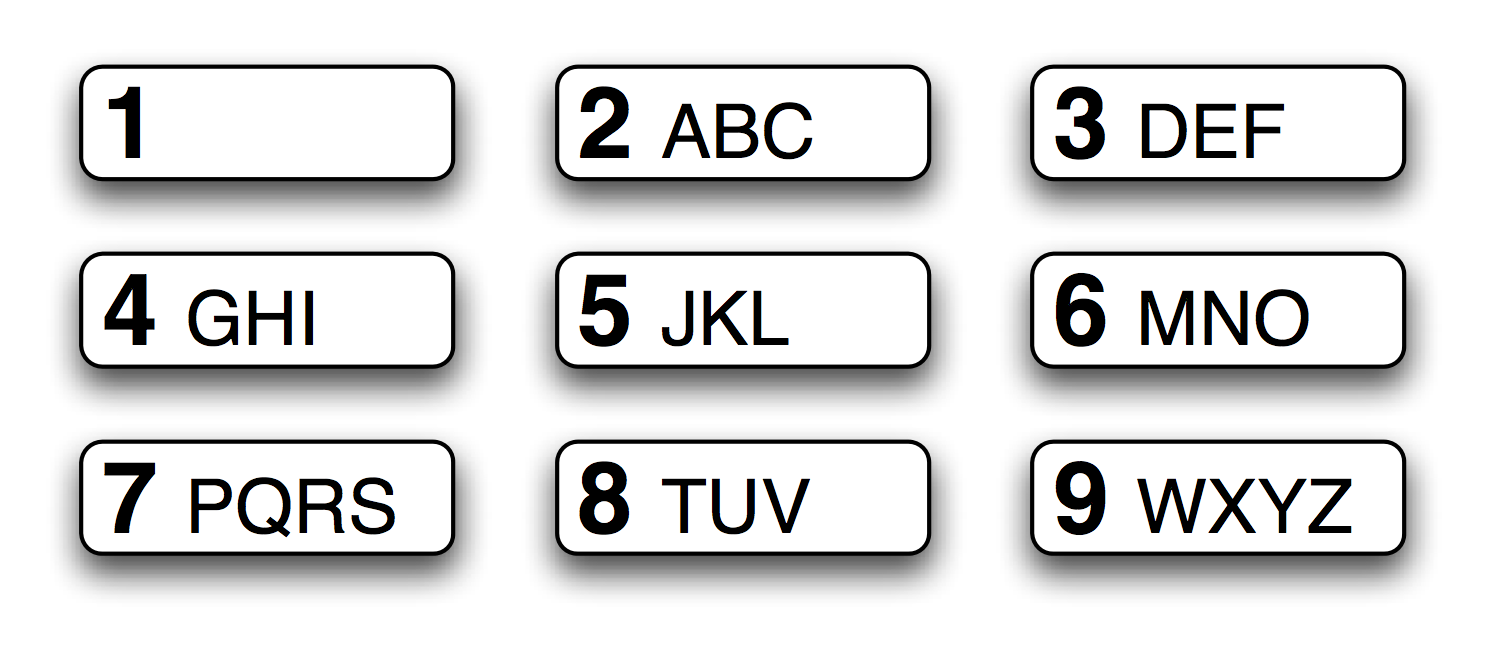

So looking over the candidates, what is the word we want? 12.4.3. Textonyms¶The NLTK Book, Ch. 3introduces the following concept of textonym with this definition: The T9 system is used for entering text on mobile phones: Two or more

words that are ent ered with the same sequence of keystrokes are known

as textonyms. For example, both hole and golf are entered by

pressing the sequence from IPython.core.display import HTML

img_data = 'https://www.nltk.org/images/T9.png'

HTML(f'<img src={img_data} width=500>')

What other words could be produced with the same sequence? Here we could use the regular expression >>> [w for w in wds if re.search('^[ghi][mno][jkl][def]$', w)]

['gold', 'golf', 'hold', 'hole']

Try the following. Find all words that can be spelled out with the

sequence # Put your answer in place of xxx in the line bbeflow

regex = r'xxx'

[w for w in wds if re.search(regex, w)]

[u'dilo', u'film', u'filo']

Now find all words that can be spelled out with the sequence 4653. regex = r'xxx'

[w for w in wds if re.search(regex, w)]

[u'gold', u'golf', u'hold', u'hole', u'gold', u'hole']

12.4.4. Regular expression practice¶import re

pat = r'a|b|c'

pat2 = r'[abc]'

pat3 = r'\w\w\w'

print(pat3)

pat4 = '\\w\\w\\w'

print(pat4)

print(re.match(pat3,'bcd'))

print(re.match(pat3,'1bd'))

print(re.match(pat3,'b1d'))

print(re.match(pat3,'b-d'))

print(re.match(pat3,'b?d'))

print(re.match(pat3,'b d'))

print(re.match(pat3,'bda '))

www www www <re.Match object; span=(0, 3), match='bcd'> <re.Match object; span=(0, 3), match='1bd'> <re.Match object; span=(0, 3), match='b1d'> None None None <re.Match object; span=(0, 3), match='bda'> Edit this cell and after each regular expression, describe the class of strings it matches. Check your answer examining the output of the code cell that follows.

########################################

### Some regular expressions ###

########################################

re2 = r'[a-zA-Z]+' #Any string consisting of ltters of the alphabet, upper or lower case

re3 = r'[A-Z][a-z]+'

re4 = r'\d+(\.\d+)?'

re5 = r'([bcdfghjklmnpqrstvwxyz][aeiou][bcdfghjklmnpqrstvwxyz])*'

re6 = r'\w+|[^\w\s]+'

res = [re2,re3,re4,re5,re6]

########################################

### Some example strings ###

########################################

example1 = 'abracadabra'

example2 = '1billygoat'

example3 = 'billygoat1'

example4 = '43.1789'

example4a = '43x1789'

example5 = '43.'

example6 = '43'

example7 = 'road_runner'

example8 = ' road_runner'

example9 = 'bathos'

example10 = "The little dog laughed to see such a sight."

example11 = 'socrates'

example12 = 'Socrates'

example13 = '*&%#!?'

example14 = 'IBM'

examples = [example1,example2,example3,example4,example4a,example5,example6,

example7,example8,example9,example10,example11,example12,example13,

example14]

########################################

### Trying some matches ###

########################################

for i,re_pat in enumerate(res):

banner = 're%d %s' % (i+2,re_pat)

print()

print(banner)

print('=' * len(banner))

print()

for (i,ex) in enumerate(examples):

match = re.match(re_pat,ex)

if match:

print(' %2d. %-45s %s' % (i+1,ex,ex[match.start():match.end()]))

else:

print(' %2d. %-45s %s' %(i+1,ex,None))

re2 [a-zA-Z]+ ============= 1. abracadabra abracadabra 2. 1billygoat None 3. billygoat1 billygoat 4. 43.1789 None 5. 43x1789 None 6. 43. None 7. 43 None 8. road_runner road 9. road_runner None 10. bathos bathos 11. The little dog laughed to see such a sight. The 12. socrates socrates 13. Socrates Socrates 14. &%#!? None 15. IBM IBM re3 [A-Z][a-z]+ =============== 1. abracadabra None 2. 1billygoat None 3. billygoat1 None 4. 43.1789 None 5. 43x1789 None 6. 43. None 7. 43 None 8. road_runner None 9. road_runner None 10. bathos None 11. The little dog laughed to see such a sight. The 12. socrates None 13. Socrates Socrates 14. *&%#!? None 15. IBM None re4 d+(.d+)? =============== 1. abracadabra None 2. 1billygoat 1 3. billygoat1 None 4. 43.1789 43.1789 5. 43x1789 43 6. 43. 43 7. 43 43 8. road_runner None 9. road_runner None 10. bathos None 11. The little dog laughed to see such a sight. None 12. socrates None 13. Socrates None 14. *&%#!? None 15. IBM None re5 ([bcdfghjklmnpqrstvwxyz][aeiou][bcdfghjklmnpqrstvwxyz]) ============================================================ 1. abracadabra 2. 1billygoat 3. billygoat1 bil 4. 43.1789 5. 43x1789 6. 43. 7. 43 8. road_runner 9. road_runner 10. bathos bathos 11. The little dog laughed to see such a sight. 12. socrates socrat 13. Socrates 14. *&%#!? 15. IBM re6 w+|[^ws]+ ================ 1. abracadabra abracadabra 2. 1billygoat 1billygoat 3. billygoat1 billygoat1 4. 43.1789 43 5. 43x1789 43x1789 6. 43. 43 7. 43 43 8. road_runner road_runner 9. road_runner None 10. bathos bathos 11. The little dog laughed to see such a sight. The 12. socrates socrates 13. Socrates Socrates 14. *&%#!? *&%#!? 15. IBM IBM Make sure you can answer the following questions about the results of testing these regular expressions on the examples:

12.4.5. An example that requires NLTK to be installed¶import nltk

nltk.download('brown')

[nltk_data] Downloading package brown to /Users/gawron/nltk_data...

[nltk_data] Package brown is already up-to-date!

True

To run the code for this example, you will use a balanced corpus of English texts, a corpus collected with the purpose of representing a balanced variety of English text types: fiction, poetry, speech, non fiction, and so on. One relatively well-established, free, and easy-to-get example of such a corpus is the Brown Corpus. Brown is about 1.2 M words. You can import the corpus as follows: ::

>>> from nltk.corpus import brown

If this does not work, it is because you have nltk installed without the

accompanying corpora. You can download any nltk corpus you need through

the ::

>>> import nltk

>>> nltk.download('brown')

You may be told: Package brown is already up-to-date!

or your current nltk install will update >>> from nltk.corpus import brown

The following returns a list of all 1.2 M word tokens in Brown: >>> brown.words()

['The', 'Fulton', 'County', 'Grand', 'Jury', 'said', ...]

# From http://www.nltk.org/book/ch03.html

# Find the most common vowel sequences in English. Note: be patient. Evaluating this may take a while.

from nltk.corpus import brown

from collections import Counter

import re

bw = sorted(set(brown.words()))

# Find every instance of two or more consecutive vowels, and count tokens of each.

# Replace the xxx in the string below to provide your answer (Hint: Use the regex construction

# mean 2 or more matches for the preceding expression.)

regex = r'xxx'

ctr = Counter(vs for word in bw for vs in re.findall(regex,word))

#Correct output shown

ctr.most_common(25)

[('io', 2787),

('ea', 2249),

('ou', 1855),

('ie', 1799),

('ia', 1400),

('ee', 1289),

('oo', 1174),

('ai', 1145),

('ue', 541),

('au', 540),

('ua', 502),

('ei', 485),

('ui', 483),

('oa', 466),

('oi', 412),

('eo', 250),

('iou', 225),

('eu', 187),

('oe', 181),

('iu', 128),

('ae', 85),

('eau', 54),

('uo', 53),

('eou', 52),

('uou', 37)]

The answer is kind of a surprise (surely “ea” and “ou” are more natural than “io”!), but there is no bug. The vowel sequence “io” occurs in more English words than any other vowel sequence. Can you think why? Using the the code above as inspiration, find all the English words containing the vowel sequence “uou”. 12.4.6. Poker examples¶Suppose you are writing a poker program where a player’s hand is represented as a 5-character string with each character representing a card, “a” for ace, “k” for king, “q” for queen, “j” for jack, “t” for 10, and “2” through “9” representing the card with that value. To see if a given string is a valid hand, one could run the code in t he following cell import re

def displaymatch(regex,text):

match = regex.match(text)

if match is None:

matchstring = None

else:

matchstring = '%s[%s]%s' % (text[:match.start()],text[match.start():match.end()],text[match.end():])

print('%-10s %s' % (text,matchstring))

valid = re.compile(r"^[a2-9tjqk]{5}$")

## Some examples

displaymatch(valid, "akt5q") # Valid.

displaymatch(valid, "akt5e") # Invalid.

displaymatch(valid, "akt") # Invalid.

displaymatch(valid, "727ak") # Valid.

displaymatch(valid, "727aka") # Invalid.

displaymatch(valid, "aaaaa") # Invalid.

akt5q [akt5q]

akt5e None

akt None

727ak [727ak]

727aka None

aaaaa [aaaaa]

The hand “727ak” contains a pair, and we would like to recognize such hands as special, so that we can go all in. We can do this using regular expression groups and register references. The match for each parenthesized part of a regular expression is called a group. We can refer back to the particular match associated with a group with :raw-latex:`\integer`. Where integer is any integer from 1 through 9. \1 refers to the first group, \2 to the second, and so on. So to match poker hands with pairs, we do the following. pair = re.compile(r".*(.).*\1.*")

displaymatch(pair,"727ak")

displaymatch(pair,"723ak")

pair.match("727ak").groups()[0]

727ak [727ak]

723ak None

'7'

displaymatch(pair,"a2aak")

pair.match("aa2ak").groups()[0]

a2aak [a2aak]

'a'

Of course, the regex A problem with import re

def displaymatch(regex,text, print_groups=False):

match = regex.match(text)

if match is None:

matchstring = None

else:

matchstring = '%s[%s]%s' % (text[:match.start()],text[match.start():match.end()],text[match.end():])

if print_groups and match:

print('%-10s %s %s' % (text,matchstring,match.groups()))

else:

print('%-10s %s' % (text,matchstring))

# Re for recognizing pair hands

pair = re.compile(r".*(.).*\1")

print("pair")

displaymatch(pair,"723ak",print_groups=True)

displaymatch(pair,"7a3ak",print_groups=True)

print()

## Write your regex for recognizing two pair below. Test

## This version is not adequate. Look at the examples to see why.

print("two pair")

two_pair = re.compile(r".*(.).*(.).*\1.*\2.*")

displaymatch(two_pair,"7a272",print_groups=True)

displaymatch(two_pair,"722a7",print_groups=True) # shd succeed, does not

displaymatch(two_pair,"7722a",print_groups=True) # shd succeed, does not

#displaymatch(two_pair,"7a722",print_groups=True)

#displaymatch(two_pair,"727a2",print_groups=True)

#displaymatch(two_pair,"aaaa2",print_groups=True) # Will succeed on this one, but that's ok

pair

723ak None

7a3ak [7a3a]k ('a',)

two pair

7a272 [7a272] ('7', '2')

722a7 None

7722a None

12.4.7. Questions¶

12.4.8. How to do extraction¶The following example is from

import re

html_string = """

<div class="br10" id="stationSelect">

<a class="br10" id="stationselector_button" href="javascript:void(0);" onclick="_gaq.push(['_trackEvent', 'Station Select', 'Opened']);"><span>Station Select</span></a>

</div>

</div>

<div id="conds_dashboard">

<div id="hour00">

<div id="nowCond">

<div class="titleSubtle">Now</div>

<div id="curIcon"><a href="" class="iconSwitchBig"><img src="http://icons-ak.wxug.com/i/c/k/nt_partlycloudy.gif" width="44" height="44" alt="Scattered Clouds" class="condIcon" /></a></div>

<div id="curCond">Scattered Clouds</div>

</div>

<div id="nowTemp">

<div class="titleSubtle">Temperature</div>

<div id="tempActual"><span id="rapidtemp" class="pwsrt" pwsid="KCASANDI123" pwsunit="english" pwsvariable="tempf" english="°F" metric="°C" value="55.8">

<span class="nobr"><span class="b">55.8</span> °F</span>

</span></div>

<div id="tempFeel">Feels Like

<span class="nobr"><span class="b">55.1</span> °F</span>

</div>

</div>

"""

pattern = r'<div\s+id\s*=\s*\"tempActual\"\s*>.*?(\d{1,3}\.\d).*?</div>'

pattern_re = re.compile(pattern,re.MULTILINE | re.DOTALL)

#m = re.search(pattern_re,html_string)

#m.groups()

pattern_re.findall(html_string)

['55.8']

The pattern in the example above was built up piece by piece. First we

built a regular expression matching the subpattern = r'<div\s+id\s*=\s*\"nowTemp\"\s*>

The corepattern = r'(\d{1,3}\.\d)'

Finally we tested the last part: lastpattern = r`</div>'

12.4.9. Tokenization (NLTK assumed)¶Tokenization is the process of breaking up a text into words. We have in

some cases used There are three tokenizations of

and so on. We apply this pattern to the example string # From http://www.nltk.org/book/ch03.html

import re

text = """

"That," said Fred, "is what

you ... get in the U.S.A. for $5.29."

"""

try1 = text.split()

# Notice the use of special NONCAPTURING parens (?:...)

# All parens in the regexp must be non capturing.

pattern = r"""

(?:[A-Z]\.)+ # abbreviations, e.g. U.S.A.

|\w+(?:-\w+)* # words with optional internal hyphens

|\$?\d+(?:\.\d+)?%? # numbers, money and percents, e.g. 3.14, $12.40, 82%

|\.\.\. # ellipsis

|[][.,;"'?():-_`] # keep punctuation, delimiters as separate word tokens

"""

re_flags = re.UNICODE | re.MULTILINE | re.DOTALL | re.X

pattern_re = re.compile(pattern,re_flags)

try2 = pattern_re.findall(text)

# Or equivalently, let nltk do some of the work.

import nltk

try3 = nltk.regexp_tokenize(text,pattern,flags=re_flags)

try1

['"That,"',

'said',

'Fred,',

'"is',

'what',

'you',

'...',

'get',

'in',

'the',

'U.S.A.',

'for',

'$5.29."']

The In the next two tries, we use such a regular expression (defined as

Next, we call The results of using try3

['"',

'That',

',',

'"',

'said',

'Fred',

',',

'"',

'is',

'what',

'you',

'...',

'get',

'in',

'the',

'U.S.A.',

'for',

'$5.29',

'.',

'"']

try2 == try3

True

Python regular expressions use parentheses for two different things,

defining retrievable groups, which as we saw, is useful for extraction,

and defining the scope of some regular expression operator (like text = """

"That," said Fred, "is what

you ... get in the U.S.A. for $5.29."

"""

# This is illegal, do you know why?

#patx = r'\b\B+\b'

patx = r'\w+'

re_flags = re.UNICODE | re.MULTILINE | re.DOTALL | re.X

patx_re = re.compile(patx,re_flags)

try4 = patx_re.findall(text)

Here is what you get. Is this a good result? try4

['That',

'said',

'Fred',

'is',

'what',

'you',

'get',

'in',

'the',

'U',

'S',

'A',

'for',

'5',

'29']

12.4.10. Sentence boundary detection¶import re

text = """

The king rarely saw Marie

on Tuesdays, but

he did see her on Wednesdays. He liked

to take long walks

in the garden, gazing longingly at the

rhododendrons. She

thought this

odd. Me, too.

"""

lines = re.split(r'\s*[!?.]\s*', text)

lines

['nThe king rarely saw Marie non Tuesdays, butnhe did see her on Wednesdays', 'He likednto take long walksnin the garden, gazing longingly at thenrhododendrons', 'Shenthought thisnodd', 'Me, too', ''] Now let’s clean this up removing unnecessary line breaks and white

space. For each element in sentences0 = [' '.join(line.split()) for line in lines]

sentences = [exp for exp in sentences0 if exp]

sentences

['The king rarely saw Marie on Tuesdays, but he did see her on Wednesdays',

'He liked to take long walks in the garden, gazing longingly at the rhododendrons',

'She thought this odd',

'Me, too']

We wrap it all up in a function, supplying the above pattern as a default if the user doesn’t specify one. def sent_tokenize (text, pat=r'\s*[!?.]\s*'):

lines = re.split(pat, text)

sentences0 = [' '.join(line.split()) for line in lines]

return [exp for exp in sentences0 if exp]

12.4.11. Putting it all together¶In the next cell we use negative lookahead, which allows us to match

an instance of one pattern as long as it is not immediately followed by

an instance of another. For example, using text = 'Isaac Asimov patted Isaac Stern on the back'

print(re.findall(r"Isaac",text))

print(re.findall(r"Isaac(?!\s*Asimov)",text))

['Isaac', 'Isaac']

['Isaac']

We input a raw text string and first tokenize sentences, then words within sentences, returning a list of tokenized sentences. Each tokenized sentence is a list of words. import nltk

import re

pattern = r"""

(?:[A-Z]\.)+ # abbreviations, e.g. U.S.A.

|\$?\d+(?:\.\d+)?%? # numbers, money and percents, e.g. 3.14, $12.40, 82%

|\$?\.\d+%? # numbers, money and percents, e.g. .14, $.40, '/8%

|\w+(?:-\w+)* # words with optional internal hyphens. NB \w includes \d

|\.\.\. # ellipsis

|[][./,;"'!?():-_`] # keep punctuation, delimiters as separate word tokens

"""

re_flags = re.UNICODE | re.MULTILINE | re.DOTALL | re.X

# Add in to our sentence boundary pattern

# that the next letters following the sentence ender

# must NOT be a lower case letter (a-z).

back_pat = '\s*[!?.]\s+(?![a-z])'

def sent_tokenize (text, pat = '\s*[!?.]\s+'):

lines = re.split(pat, text)

sentences0 = [' '.join(line.split()) for line in lines]

return [exp for exp in sentences0 if exp]

text = """

The king rarely saw Marie

on Tuesdays, but

he did see her on Wednesdays. He liked

to take long walks

in the garden, gazing longingly at the

rhododendrons. She

thought this

odd. Me, too.

"That," said Fred, "is what

you (Texans!) get in 1/2 the U.S.A. for $5.29, .23% of nothing."

"""

sents = sent_tokenize(text,pat = back_pat)

tokenized_sents = [nltk.regexp_tokenize(sent, pattern, flags=re_flags)

for sent in sents]

tokenized_sents

[['The',

'king',

'rarely',

'saw',

'Marie',

'on',

'Tuesdays',

',',

'but',

'he',

'did',

'see',

'her',

'on',

'Wednesdays'],

['He',

'liked',

'to',

'take',

'long',

'walks',

'in',

'the',

'garden',

',',

'gazing',

'longingly',

'at',

'the',

'rhododendrons'],

['She', 'thought', 'this', 'odd'],

['Me', ',', 'too'],

['"',

'That',

',',

'"',

'said',

'Fred',

',',

'"',

'is',

'what',

'you',

'(',

'Texans',

'!',

')',

'get',

'in',

'1',

'/',

'2',

'the',

'U.S.A.',

'for',

'$5.29',

',',

'.23%',

'of',

'nothing',

'.',

'"']]

12.4.12. Example of using regular expressions to clean data¶The following is a realistic example of cleaning web data using regular

expressions. It is offered here as a reference, and particularly as an

example using 12.4.12.1. Load recipe data¶Execute the following two commands to either download the data to your machine. You will need to then move it to your Google Drive if you are using Google Colab. Modified from Python Data Science Handbook Jake VenderPlas. section 3.10 # !curl https://s3.amazonaws.com/openrecipes/20170107-061401-recipeitems.json.gz --output 20170107-061401-recipeitems.json.gz

# !gunzip 20170107-061401-recipeitems.json.gz

The next cell loads the data, does some cleanup and packages it into a DataFrame. import pandas as pd

# You will need to change the path to whereever the data is, possibly also mounting your Google Drive.

path = '/Users/gawron/Desktop/src/sphinx/python_for_ss_extras/colab_notebooks/'\

'python-for-social-science/pandas/datasets/20170107-061401-recipeitems.json'

Although try:

recipes = pd.read_json(path)

except ValueError as e:

print("ValueError:", e)

A partial solution is to read in the data line by line. There are still some minor cleanup issues. The following cell loads the data and deals with those issues. import json

def fix_dd (dd):

to_do = []

for (key,val) in dd.items():

if isinstance(val,dict) and len(val) == 1:

to_do.append((key, val[list(val.keys())[0]]))

elif isinstance(val,dict)and len(val) == 0:

print('***Zero-keyed value found***')

elif isinstance(val,dict):

print('***Multi-keyed value found***')

for (key,new_val) in to_do:

# overwrite k1 -> {k2:v} to be k1 -> v

dd[key] = new_val

return dd

with open(path) as f:

recipes = pd.DataFrame(fix_dd(json.loads(line.strip())) for line in f)

recipes.shape

(173278, 17)

We see there are nearly 200,000 recipes, and 17 columns. Let’s take a look at one row to see what we have: recipes.iloc[0]

_id 5160756b96cc62079cc2db15 name Drop Biscuits and Sausage Gravy ingredients Biscuitsn3 cups All-purpose Flourn2 Tablespo... url http://thepioneerwoman.com/cooking/2013/03/dro... image http://static.thepioneerwoman.com/cooking/file... ts 1365276011104 cookTime PT30M source thepioneerwoman recipeYield 12 datePublished 2013-03-11 prepTime PT10M description Late Saturday afternoon, after Marlboro Man ha... totalTime NaN creator NaN recipeCategory NaN dateModified NaN recipeInstructions NaN Name: 0, dtype: object Here is the recipe with the longest ingredient list: import numpy as np

test = np.argmax(recipes.ingredients.str.len())

testname = recipes.name[test]

testname

'Carrot Pineapple Spice & Brownie Layer Cake with Whipped Cream & Cream Cheese Frosting and Marzipan Carrots'

Here’s a sample of what the ingredients list (from that one row/recipe) looks like: recipes.ingredients[test][:150]

'1 cup carrot juice2 cups (280 g) all purpose flour1/2 cup (65 g) almond meal or almond flour1 tablespoon baking powder1 teaspoon baking soda3/4 teaspo'

Obviously a lot of distinct ingredients have been concatenated together without spacing, making the whole mess quite hard to read. This is the sort of thing that can happen any time when web-scraping, but is especially trying with social media data. To clean this up we want 1 cup carrot juice2 cups (280 g) all purpose flour1/2 cup (65 g) almond meal or almond flour

to come out as 1 cup carrot juice

2 cups (280 g) all purpose flour

1/2 cup (65 g) almond meal or almond flour

So we need to split the string at certain points. Let’s try to exploit the fact that an ingredient specification tends to start with a number. So we split at the point where a number is concatenated onto a non-number. To define the split points we’ll use a regular expression. A not-quite-perfect regexp to split the recipe ingredients for this LONG

list of ingredients is given in the next cell as After the splitting, we find numerous duplicates; this may have been caused by interrupted data transmission at some point during the scraping, but it is also the sort of thing that happens when scraping social media data, which often has complicated structure, and may have duplications at the source due to reposting and gatekeeper interventions. With duplicates removed our example ingredients list shortens considerably. import re

spl_re_raw = r"""

(?<=[a-z\)]) # Look behind for alphabetic character or right paren

\n? # Match line break or empty string

(?=[1-9]) # Look ahead for any digit 1-9

"""

re_flags = re.UNICODE | re.MULTILINE | re.DOTALL | re.X

spl_re = re.compile(spl_re_raw,re_flags)

raw_ingreds= re.split(spl_re,recipes.ingredients[test])

#remove dupes!

test_ingreds = set(raw_ingreds)

# There were lots of dupes!

print(' With duplicates: ',len(raw_ingreds),'ingredients\n',

'Without duplicates: ',len(test_ingreds), 'ingredients',

end='\n\n')

# print each of the ingredients after splitting and dupe removal, separated by newlines

print(*test_ingreds,sep='\n')

With duplicates: 277 ingredients

Without duplicates: 37 ingredients

2 teaspoon vanilla extract

1/2 teaspoon almond extract

1/2 cup unsweetened shredded coconutgreen and yellow liquid food coloring

green and yellow liquid food coloring

24 oz (3 bricks) full fat cream cheese

1 vanilla bean

1/2 teaspoon baking powder

1 teaspoon vanilla extract

1/4 teaspoon ground allspice

2 cups (280 g) all purpose flour

1 cup (160 g) finely chopped fresh pineapple

1 cup carrot juice

1/2 teaspoon ground nutmeg

1 lbs (450 g) carrots, finely freshly grated

1/2 cup (65 g) almond meal or almond flour

3 large eggs

1/2 cup (113 g or 1 stick) unsalted butter

85 g to 115 g (3/4 to 1 cup) confectioners’ sugar (powdered sugar)red, yellow and green gel food colorinh

2 cups (475 mL) heavy whipping cream, cold

1 tablespoon baking powder

1 teaspoon ground cinnamon

1/2 teaspoon ground ginger

1 tablespoon light corn syrup

1 1/2 cup (175 g) confectioners’ sugar (powdered sugar)

85 g to 115 g (3/4 to 1 cup) confectioners’ sugar (powdered sugar)red, yellow and green gel food colorinh

red, yellow and green gel food colorinh

3/4 cup (175 mL) neutral flavored cooking oil (like sunflower)

2 cups (400 g) white granulated sugar

1/4 teaspoon sea salt

3/4 teaspoon fine sea salt

1/4 teaspoon fresh ground black pepper

4 oz (115 g) semisweet chocolate

1/4 teaspoon ground cardamom

1 teaspoon baking soda

2 oz (57 g) unsweetened chocolate

3/4 cup (150 g) granulated white sugar

57 g (2 oz) almond paste

3/4 cup (105 g) all purpose flour

1/4 teaspoon ground cloves

Let’s package this up in a function that reinserts line breaks between

the chunks found by import re

#spl_re = re.compile(r'(?<=[a-z\)])\n?(?=[1-9])')

spl_re_raw = r"""

(?<=[a-z\)]) # Look behind for alphabetic character or right paren

\n? # Match line break or empty string

(?=[1-9]) # Look ahead for any digit 1-9

"""

re_flags = re.UNICODE | re.MULTILINE | re.DOTALL | re.X

spl_re = re.compile(spl_re_raw,re_flags)

def make_parsable (ingred_str):

# We cant use `set` for dupe removal because well-formed

# ingredient lists often have internal structure (e.g., ingredients listed

# in order of usage or all spices go together)

def remove_dupes (container):

seen,res = set(), []

for elem in container:

if elem not in seen:

res.append(elem)

seen.add(elem)

return res

# After splitting rejoin with newlines

str1 = '\n'.join(re.split(spl_re,ingred_str))

# Because there were were "\n"s in ingred_strings

# before the 1st split this 2nd split may not just undo the join

return '\n'.join(remove_dupes(str1.split('\n')))

recipes.ingredients = recipes.ingredients.apply(make_parsable)

We reprint the previous longest-ingredients list, to show that elements in the new ingredients column now print nicely. A close look will also show this solution is not perfect, precisely because there are ingredient specifications that do not start with a number. print(recipes.iloc[test].ingredients)

1 cup carrot juice

2 cups (280 g) all purpose flour

1/2 cup (65 g) almond meal or almond flour

1 tablespoon baking powder

1 teaspoon baking soda

3/4 teaspoon fine sea salt

1 teaspoon ground cinnamon

1/2 teaspoon ground ginger

1/2 teaspoon ground nutmeg

1/4 teaspoon ground allspice

1/4 teaspoon ground cloves

1/4 teaspoon ground cardamom

1/4 teaspoon fresh ground black pepper

2 cups (400 g) white granulated sugar

3/4 cup (175 mL) neutral flavored cooking oil (like sunflower)

1 lbs (450 g) carrots, finely freshly grated

1 cup (160 g) finely chopped fresh pineapple

3 large eggs

2 teaspoon vanilla extract

1/2 teaspoon almond extract

2 oz (57 g) unsweetened chocolate

4 oz (115 g) semisweet chocolate

1/2 cup (113 g or 1 stick) unsalted butter

3/4 cup (105 g) all purpose flour

1/2 teaspoon baking powder

1/4 teaspoon sea salt

3/4 cup (150 g) granulated white sugar

2 cups (475 mL) heavy whipping cream, cold

24 oz (3 bricks) full fat cream cheese

1 teaspoon vanilla extract

1 vanilla bean

1 1/2 cup (175 g) confectioners’ sugar (powdered sugar)

57 g (2 oz) almond paste

1 tablespoon light corn syrup

85 g to 115 g (3/4 to 1 cup) confectioners’ sugar (powdered sugar)red, yellow and green gel food colorinh

red, yellow and green gel food colorinh

1/2 cup unsweetened shredded coconutgreen and yellow liquid food coloring

green and yellow liquid food coloring

After these changes, the identity of the most complicated recipe has changed. test2 = np.argmax(recipes.ingredients.str.len())

recipes.name[test2]

'coq au vin'

Inspection of this “ingredient list” shows our cleanup task is not done. This data may have the recipe itself concatenated into the ingredients. A task for another day. print(recipes.ingredients[test2])

All of this is; her recipes are always ridden with steps that make you question her sanity, as well as yours for following them — for example, this one requests that you boil bacon, which some might remember caused

some hilarious righteous indignation:

A 3- to 4-ounce chunk of bacon

A heavy, 10-inch, fireproof casserole

2 tablespoons butter

2 1/2 to 3 pounds cut-up frying chicken

1/2 teaspoon salt

1/8 teaspoon pepper

1/4 cup cognac

3 cups young, full-bodied red wine such as Burgundy, Beaujolais, Cotes du Rhone or Chianti

1 to 2 cups brown chicken stock, brown stock or canned beef bouillon

1/2 tablespoon tomato paste

2 cloves mashed garlic

1/4 teaspoon thyme

1 bay leaf

12 to 24 brown-braised onions (recipe follows)

1/2 pound sautéed mushrooms (recipe follows)

Salt and pepper

3 tablespoons flour

2 tablespoons softened butter

Sprigs of fresh parsley

1. Remove the rind of and cut the bacon into lardons (rectangles 1/4-inch across and 1 inch long). Simmer for 10 minutes in 2 quarts of water. Rinse in cold water. Dry. [Deb note: As noted, I'd totally skip this step next time.]

2. Sauté the bacon slowly in hot butter until it is very lightly browned. Remove to a side dish.

3. Dry the chicken thoroughly. Brown it in the hot fat in the casserole.

4. Season the chicken. Return the bacon to the casserole with the chicken. Cover and cook slowly for 10 minutes, turning the chicken once.

5. Uncover, and pour in the cognac. Averting your face, ignite the cognac with a lighted match. Shake the casserole back and forth for several seconds until the flames subside.

6. Pour the wine into the casserole. Add just enough stock or bouillon to cover the chicken. Stir in the tomato paste, garlic and herbs. Bring to the simmer. Cover and simmer slowly for 25 to 30 minutes, or until the chicken is tender and its juices run a clear yellow when the meat is pricked with a fork. Remove the chicken to a side dish.

7. While the chicken is cooking, prepare the onions and mushrooms (recipe follows).

8. Simmer the chicken cooking liquid in the casserole for a minute or two, skimming off the fat. Then raise the heat and boil rapidly, reducing the liquid to about 2 1/4 cups. Correct seasoning. Remove from heat and discard bay leaf.

9. Blend the butter and flour together into a smooth paste (buerre manie). Beat the paste into the hot liquid with a wire whip. Bring to the simmer, stirring, and simmer for a minute or two. The sauce should be thick enough to coat a spoon lightly.

10. Arrange the chicken in the casserole, place the mushrooms and onions around it and baste with the sauce. If this dish is not to be served immediately, film the top of the sauce with stock or dot with small pieces of butter. Set aside uncovered. It can now wait indefinitely.

11. Shortly before serving, bring to the simmer, basting the chicken with the sauce. Cover and simmer slowly for 4 to 5 minutes, until the chicken is hot enough.

12. Sever from the casserole, or arrange on a hot platter. Decorate with spring for parsley.

For 18 to 24 peeled white onions about 1 inch in diameter:

1 1/2 tablespoons butter

1 1/2 tablespoons oil

A 9- to 10-inch enameled skillet

1/2 cup of brown stock, canned beef bouillon, dry white wine, red wine or water

Salt and pepper to taste

A medium herb bouquet: 3 parsley springs, 1/2 bay leaf, and 1/4 teaspoon thyme tied in cheesecloth

A 10-inch enameled skillet

1 tablespoon oil

1/2 pound fresh mushrooms, washed, well dried, left whole if small, sliced or quartered if large

1 to 2 tablespoons minced shallots or green onions (optional)

|